|

|

|

|

Next: 4.4 MITgcm execution under

Up: 4.3 Using the WRAPPER

Previous: 4.3.2 Starting the code

Contents

Subsections

4.3.3 Controlling communication

The WRAPPER maintains internal information that is used for communication

operations and that can be customized for different platforms. This section

describes the information that is held and used.

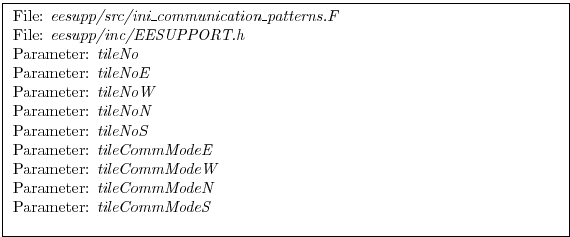

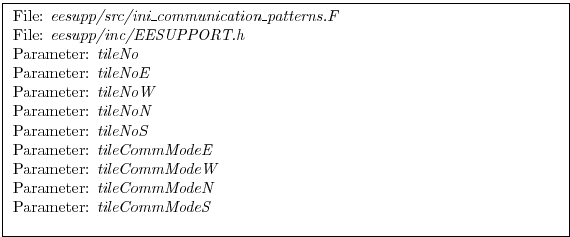

- Tile-tile connectivity information

For each tile the WRAPPER sets a flag that sets the tile number to

the north, south, east and west of that tile. This number is unique

over all tiles in a configuration. Except when using the cubed

sphere and the exch2 package, the number is held in the variables

tileNo ( this holds the tiles own number), tileNoN, tileNoS, tileNoE and tileNoW. A parameter is also

stored with each tile that specifies the type of communication that

is used between tiles. This information is held in the variables

tileCommModeN, tileCommModeS, tileCommModeE and

tileCommModeW. This latter set of variables can take one of

the following values COMM_NONE, COMM_MSG, COMM_PUT and COMM_GET. A value of COMM_NONE is

used to indicate that a tile has no neighbor to communicate with on

a particular face. A value of COMM_MSG is used to indicate

that some form of distributed memory communication is required to

communicate between these tile faces (see section

4.2.7). A value of COMM_PUT or COMM_GET is used to indicate forms of shared

memory communication (see section

4.2.6). The COMM_PUT value

indicates that a CPU should communicate by writing to data

structures owned by another CPU. A COMM_GET value indicates

that a CPU should communicate by reading from data structures owned

by another CPU. These flags affect the behavior of the WRAPPER

exchange primitive (see figure 4.7).

The routine ini_communication_patterns() is responsible for

setting the communication mode values for each tile.

When using the cubed sphere configuration with the exch2 package,

the relationships between tiles and their communication methods are

set by the exch2 package and stored in different variables. See the

exch2 package documentation (6.2.4 for details.

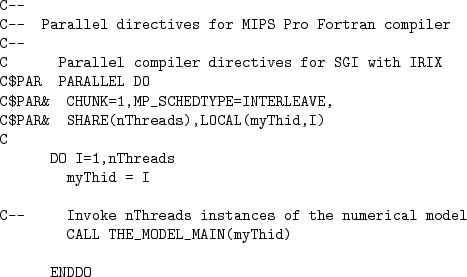

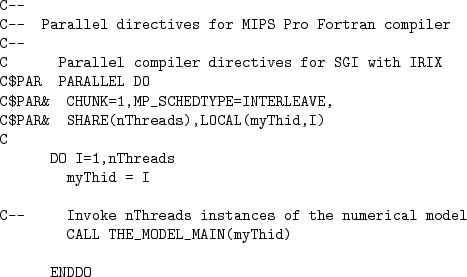

- MP directives

The WRAPPER transfers control to numerical application code through

the routine THE_MODEL_MAIN. This routine is called in a way

that allows for it to be invoked by several threads. Support for

this is based on either multi-processing (MP) compiler directives or

specific calls to multi-threading libraries (eg. POSIX

threads). Most commercially available Fortran compilers support the

generation of code to spawn multiple threads through some form of

compiler directives. Compiler directives are generally more

convenient than writing code to explicitly spawning threads. And,

on some systems, compiler directives may be the only method

available. The WRAPPER is distributed with template MP directives

for a number of systems.

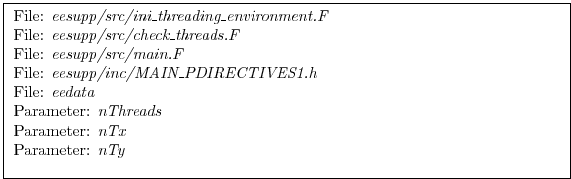

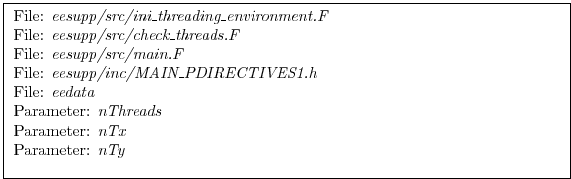

These directives are inserted into the code just before and after

the transfer of control to numerical algorithm code through the

routine THE_MODEL_MAIN. Figure 4.12 shows

an example of the code that performs this process for a Silicon

Graphics system. This code is extracted from the files main.F

and MAIN_PDIRECTIVES1.h. The variable nThreads

specifies how many instances of the routine THE_MODEL_MAIN

will be created. The value of nThreads is set in the routine

INI_THREADING_ENVIRONMENT. The value is set equal to the the

product of the parameters nTx and nTy that are read from

the file eedata. If the value of nThreads is

inconsistent with the number of threads requested from the operating

system (for example by using an environment variable as described in

section 4.3.2.1) then usually an error

will be reported by the routine CHECK_THREADS.

- memsync flags

As discussed in section 4.2.6.1, a low-level

system function may be need to force memory consistency on some

shared memory systems. The routine MEMSYNC() is used for this

purpose. This routine should not need modifying and the information

below is only provided for completeness. A logical parameter exchNeedsMemSync set in the routine INI_COMMUNICATION_PATTERNS() controls whether the MEMSYNC() primitive is called. In general this routine is only

used for multi-threaded execution. The code that goes into the MEMSYNC() routine is specific to the compiler and processor used.

In some cases, it must be written using a short code snippet of

assembly language. For an Ultra Sparc system the following code

snippet is used

asm("membar #LoadStore|#StoreStore");

for an Alpha based system the equivalent code reads

asm("mb");

while on an x86 system the following code is required

asm("lock; addl $0,0(%%esp)": : :"memory")

- Cache line size

As discussed in section 4.2.6.2,

milti-threaded codes explicitly avoid penalties associated with

excessive coherence traffic on an SMP system. To do this the shared

memory data structures used by the GLOBAL_SUM, GLOBAL_MAX and BARRIER routines are padded. The variables

that control the padding are set in the header file EEPARAMS.h. These variables are called cacheLineSize, lShare1, lShare4 and lShare8. The default values

should not normally need changing.

- _BARRIER

This is a CPP macro that is expanded to a call to a routine which

synchronizes all the logical processors running under the WRAPPER.

Using a macro here preserves flexibility to insert a specialized

call in-line into application code. By default this resolves to

calling the procedure BARRIER(). The default setting for the

_BARRIER macro is given in the file CPP_EEMACROS.h.

- _GSUM

This is a CPP macro that is expanded to a call to a routine which

sums up a floating point number over all the logical processors

running under the WRAPPER. Using a macro here provides extra

flexibility to insert a specialized call in-line into application

code. By default this resolves to calling the procedure GLOBAL_SUM_R8() ( for 64-bit floating point operands) or GLOBAL_SUM_R4() (for 32-bit floating point operands). The

default setting for the _GSUM macro is given in the file CPP_EEMACROS.h. The _GSUM macro is a performance critical

operation, especially for large processor count, small tile size

configurations. The custom communication example discussed in

section 4.3.3.2 shows how the macro is used to invoke

a custom global sum routine for a specific set of hardware.

- _EXCH

The _EXCH CPP macro is used to update tile overlap regions. It is

qualified by a suffix indicating whether overlap updates are for

two-dimensional ( _EXCH_XY ) or three dimensional ( _EXCH_XYZ )

physical fields and whether fields are 32-bit floating point (

_EXCH_XY_R4, _EXCH_XYZ_R4 ) or 64-bit floating point (

_EXCH_XY_R8, _EXCH_XYZ_R8 ). The macro mappings are defined in

the header file CPP_EEMACROS.h. As with _GSUM, the _EXCH

operation plays a crucial role in scaling to small tile, large

logical and physical processor count configurations. The example in

section 4.3.3.2 discusses defining an optimized and

specialized form on the _EXCH operation.

The _EXCH operation is also central to supporting grids such as the

cube-sphere grid. In this class of grid a rotation may be required

between tiles. Aligning the coordinate requiring rotation with the

tile decomposition, allows the coordinate transformation to be

embedded within a custom form of the _EXCH primitive. In these

cases _EXCH is mapped to exch2 routines, as detailed in the exch2

package documentation 6.2.4.

- Reverse Mode

The communication primitives _EXCH and _GSUM both employ

hand-written adjoint forms (or reverse mode) forms. These reverse

mode forms can be found in the source code directory pkg/autodiff. For the global sum primitive the reverse mode form

calls are to GLOBAL_ADSUM_R4 and GLOBAL_ADSUM_R8.

The reverse mode form of the exchange primitives are found in

routines prefixed ADEXCH. The exchange routines make calls to

the same low-level communication primitives as the forward mode

operations. However, the routine argument simulationMode is

set to the value REVERSE_SIMULATION. This signifies to the

low-level routines that the adjoint forms of the appropriate

communication operation should be performed.

- MAX_NO_THREADS

The variable MAX_NO_THREADS is used to indicate the maximum

number of OS threads that a code will use. This value defaults to

thirty-two and is set in the file EEPARAMS.h. For single

threaded execution it can be reduced to one if required. The value

is largely private to the WRAPPER and application code will not

normally reference the value, except in the following scenario.

For certain physical parametrization schemes it is necessary to have

a substantial number of work arrays. Where these arrays are

allocated in heap storage (for example COMMON blocks) multi-threaded

execution will require multiple instances of the COMMON block data.

This can be achieved using a Fortran 90 module construct. However,

if this mechanism is unavailable then the work arrays can be extended

with dimensions using the tile dimensioning scheme of nSx and

nSy (as described in section

4.3.1). However, if the

configuration being specified involves many more tiles than OS

threads then it can save memory resources to reduce the variable

MAX_NO_THREADS to be equal to the actual number of threads

that will be used and to declare the physical parameterization work

arrays with a single MAX_NO_THREADS extra dimension. An

example of this is given in the verification experiment aim.5l_cs. Here the default setting of MAX_NO_THREADS is

altered to

INTEGER MAX_NO_THREADS

PARAMETER ( MAX_NO_THREADS = 6 )

and several work arrays for storing intermediate calculations are

created with declarations of the form.

common /FORCIN/ sst1(ngp,MAX_NO_THREADS)

This declaration scheme is not used widely, because most global data

is used for permanent not temporary storage of state information.

In the case of permanent state information this approach cannot be

used because there has to be enough storage allocated for all tiles.

However, the technique can sometimes be a useful scheme for reducing

memory requirements in complex physical parameterizations.

Figure 4.12:

Prior to transferring control to the procedure THE_MODEL_MAIN() the WRAPPER may use MP directives to spawn

multiple threads.

|

The isolation of performance critical communication primitives and the

sub-division of the simulation domain into tiles is a powerful tool.

Here we show how it can be used to improve application performance and

how it can be used to adapt to new griding approaches.

4.3.3.2 JAM example

On some platforms a big performance boost can be obtained by binding

the communication routines _EXCH and _GSUM to

specialized native libraries (for example, the shmem library on CRAY

T3E systems). The LETS_MAKE_JAM CPP flag is used as an

illustration of a specialized communication configuration that

substitutes for standard, portable forms of _EXCH and _GSUM. It affects three source files eeboot.F, CPP_EEMACROS.h and cg2d.F. When the flag is defined is has

the following effects.

- An extra phase is included at boot time to initialize the custom

communications library ( see ini_jam.F).

- The _GSUM and _EXCH macro definitions are replaced

with calls to custom routines (see gsum_jam.F and exch_jam.F)

- a highly specialized form of the exchange operator (optimized

for overlap regions of width one) is substituted into the elliptic

solver routine cg2d.F.

Developing specialized code for other libraries follows a similar

pattern.

4.3.3.3 Cube sphere communication

Actual _EXCH routine code is generated automatically from a

series of template files, for example exch_rx.template. This

is done to allow a large number of variations on the exchange process

to be maintained. One set of variations supports the cube sphere grid.

Support for a cube sphere grid in MITgcm is based on having each face

of the cube as a separate tile or tiles. The exchange routines are

then able to absorb much of the detailed rotation and reorientation

required when moving around the cube grid. The set of _EXCH

routines that contain the word cube in their name perform these

transformations. They are invoked when the run-time logical parameter

useCubedSphereExchange is set true. To facilitate the

transformations on a staggered C-grid, exchange operations are defined

separately for both vector and scalar quantities and for grid-centered

and for grid-face and grid-corner quantities. Three sets of exchange

routines are defined. Routines with names of the form exch_rx

are used to exchange cell centered scalar quantities. Routines with

names of the form exch_uv_rx are used to exchange vector

quantities located at the C-grid velocity points. The vector

quantities exchanged by the exch_uv_rx routines can either be

signed (for example velocity components) or un-signed (for example

grid-cell separations). Routines with names of the form exch_z_rx are used to exchange quantities at the C-grid vorticity

point locations.

Next: 4.4 MITgcm execution under

Up: 4.3 Using the WRAPPER

Previous: 4.3.2 Starting the code

Contents

mitgcm-support@mitgcm.org

| Copyright © 2006

Massachusetts Institute of Technology |

Last update 2018-01-23 |

|

|