
5.2 TLM and ADM generation in general

In this section we describe, in general terms, the parts of the code that are relevant for automatic differentiation with the software tool TAF. The modifications required to use OpenAD are described in Section 5.5.

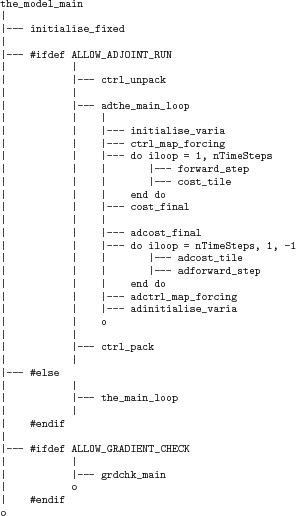

Figure 5.2:


The basic flow is depicted in Figure 5.2.

If the CPP option ALLOW_AUTODIFF_TAMC is defined, the driver routine the_model_main does not call the_main_loop. Instead, it invokes either the adjoint of this routine, adthe_main_loop (when ALLOW_ADJOINT_RUN is defined), or its tangent linear, g_the_main_loop (when ALLOW_TANGENTLINEAR_RUN is defined). These are the top-level routines in terms of automatic differentiation.
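In MITgcm this choice is made at compile time by the C preprocessor. As an illustration of the selection logic only, the Python sketch below maps a set of defined option names to the routine that would be called. The helper select_top_level is hypothetical, and the three routines are passed in as plain callables rather than being the generated Fortran. Two behaviours are assumptions of the sketch, not statements about the_model_main: the adjoint takes precedence if both run types are defined, and the forward model is used when ALLOW_AUTODIFF_TAMC is defined alone.

```python
from typing import Callable, Iterable


def select_top_level(defined_options: Iterable[str],
                     the_main_loop: Callable,
                     adthe_main_loop: Callable,
                     g_the_main_loop: Callable) -> Callable:
    """Return the routine the driver would call for a given set of CPP options."""
    opts = set(defined_options)
    if "ALLOW_AUTODIFF_TAMC" in opts:
        # Assumed precedence: adjoint run first, then tangent linear run.
        if "ALLOW_ADJOINT_RUN" in opts:
            return adthe_main_loop
        if "ALLOW_TANGENTLINEAR_RUN" in opts:
            return g_the_main_loop
    # Assumed fallback: plain forward integration of the model.
    return the_main_loop
```

For example, `select_top_level(["ALLOW_AUTODIFF_TAMC", "ALLOW_ADJOINT_RUN"], fwd, adj, tlm)` returns `adj`, whereas `ALLOW_ADJOINT_RUN` without `ALLOW_AUTODIFF_TAMC` falls through to the forward routine.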

The routines adthe_main_loop and g_the_main_loop are generated by TAF. The adjoint routine adthe_main_loop contains the forward integration of the full model, the cost function calculation, any additional storing that is required for efficient checkpointing, and the reverse integration of the adjoint model.
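To make this sequence concrete, here is a toy Python analogue (not TAF-generated code). It assumes a scalar model x_{n+1} = x_n + DT·sin(x_n) with cost J = ½x_N²; the model, DT, NSTEPS and the name adthe_main_loop_toy are hypothetical. Every state is stored during the forward sweep, the simplest and most memory-hungry strategy, so no checkpointing is involved.

```python
import math

# Hypothetical toy stand-in for the full model (not MITgcm code):
# x_{n+1} = x_n + DT * sin(x_n), cost J = 0.5 * x_N**2.
DT = 0.1
NSTEPS = 20


def step(x):
    return x + DT * math.sin(x)


def adthe_main_loop_toy(x0):
    """Return (J, dJ/dx0) following the order of operations described above."""
    # 1) Forward integration, storing every state the reverse sweep needs.
    tape = []
    x = x0
    for _ in range(NSTEPS):
        tape.append(x)
        x = step(x)
    # 2) Cost function calculation.
    cost = 0.5 * x * x
    # 3) Reverse integration of the adjoint, seeded with dJ/dx_N = x_N.
    adx = x
    for xn in reversed(tape):
        # Adjoint of one step: multiply by d(step)/dx = 1 + DT*cos(x_n).
        adx *= 1.0 + DT * math.cos(xn)
    return cost, adx
```

A centered finite difference of J with respect to x0 should agree closely with the returned gradient, which is a quick way to check such a hand-written adjoint.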


In Figure 5.2 the structure of adthe_main_loop has been strongly simplified to focus on the essentials; in particular, no checkpointing procedures are shown.
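As a rough illustration of what the simplified flow leaves out, the sketch below replaces "store every state" with uniform single-level checkpointing. The forward sweep keeps only every CHECKPOINT_EVERY-th state, and the reverse sweep recomputes each segment from its checkpoint before running the adjoint over it. This generic scheme is chosen for brevity and is not the checkpointing MITgcm sets up through TAF directives; the toy model, the interval and all names are assumptions.

```python
import math

# Hypothetical toy stand-in for the full model (not MITgcm code).
DT = 0.1
NSTEPS = 20
CHECKPOINT_EVERY = 5  # assumed interval; NSTEPS need not be a multiple of it


def step(x):
    return x + DT * math.sin(x)


def adjoint_with_checkpoints(x0):
    """Return (J, dJ/dx0, number of stored checkpoints)."""
    # Forward sweep: store only the state at the start of each segment.
    checkpoints = {}
    x = x0
    for n in range(NSTEPS):
        if n % CHECKPOINT_EVERY == 0:
            checkpoints[n] = x
        x = step(x)
    cost = 0.5 * x * x
    adx = x  # dJ/dx_N
    # Reverse sweep over segments, latest segment first.
    for start in sorted(checkpoints, reverse=True):
        stop = min(start + CHECKPOINT_EVERY, NSTEPS)
        # Recompute the states x_start .. x_{stop-1} from the checkpoint.
        states = [checkpoints[start]]
        for _ in range(start, stop - 1):
            states.append(step(states[-1]))
        # Adjoint of each step in the segment, in reverse order.
        for xn in reversed(states):
            adx *= 1.0 + DT * math.cos(xn)
    return cost, adx, len(checkpoints)
```

The gradient is the same as with full storage, but only NSTEPS / CHECKPOINT_EVERY states are kept, at the price of one extra forward integration of each segment.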

Prior to the call of adthe_main_loop, the routine ctrl_unpack is invoked to unpack the control vector or to initialise the control variables. Following the call of adthe_main_loop, the routine ctrl_pack is invoked to pack the control vector (cf. Section 5.2.5).
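Conceptually, unpacking splits one flat control vector into the individual control variables, and packing concatenates them (or their adjoints) back into a single vector. The Python sketch below shows only that round trip under an assumed layout of (name, length) pairs; the function names and the example variable names xx_theta and xx_salt are hypothetical, and the actual routines' file handling and any masking or scaling are not modeled.

```python
from typing import Dict, List, Tuple

# Assumed layout: ordered (variable name, number of elements) pairs.
Layout = List[Tuple[str, int]]


def ctrl_unpack_sketch(vector: List[float], layout: Layout) -> Dict[str, List[float]]:
    """Split a flat control vector into named control variables."""
    expected = sum(n for _, n in layout)
    if len(vector) != expected:
        raise ValueError(f"control vector has {len(vector)} elements, expected {expected}")
    fields: Dict[str, List[float]] = {}
    offset = 0
    for name, n in layout:
        fields[name] = list(vector[offset:offset + n])
        offset += n
    return fields


def ctrl_pack_sketch(fields: Dict[str, List[float]], layout: Layout) -> List[float]:
    """Concatenate named variables back into one flat vector, in layout order."""
    vector: List[float] = []
    for name, n in layout:
        if len(fields[name]) != n:
            raise ValueError(f"{name} has {len(fields[name])} elements, expected {n}")
        vector.extend(fields[name])
    return vector
```

Keeping the layout in one place guarantees that packing and unpacking use the same ordering, so a round trip returns the original vector.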

Gradient checks are enabled by defining the CPP option ALLOW_GRADIENT_CHECK. In this case the driver routine grdchk_main is called after the gradient has been computed via the adjoint (cf. Section 5.3).
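The idea behind a gradient check is to compare each component of the adjoint gradient with a finite-difference estimate obtained by perturbing that control element and rerunning the cost function. A minimal Python sketch follows. It assumes centered differences, a single perturbation size eps, and the ratio of the two estimates as the diagnostic; these choices, and the name gradient_check, are illustrative and not taken from grdchk_main.

```python
from typing import Callable, List


def gradient_check(cost: Callable[[List[float]], float],
                   adjoint_grad: List[float],
                   u: List[float],
                   eps: float = 1e-6) -> List[float]:
    """Return, per control element, adjoint gradient / finite-difference gradient."""
    ratios = []
    for i in range(len(u)):
        up = list(u)
        um = list(u)
        up[i] += eps
        um[i] -= eps
        fd = (cost(up) - cost(um)) / (2.0 * eps)  # centered difference
        ratios.append(adjoint_grad[i] / fd if fd != 0.0 else float("nan"))
    return ratios
```

For a toy cost J(u) = ½(u₀² + u₁²) + u₀u₁ at u = [1, 2], whose exact gradient is [3, 3], both ratios come out very close to one. Each check requires two extra forward runs per control element, which is why in practice only a few elements are usually tested.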


mitgcm-support@mitgcm.org

Copyright © 2006 Massachusetts Institute of Technology

Last update 2018-01-23