Next: 4.3.3 Controlling communication Up: 4.3 Using the WRAPPER Previous: 4.3.1 Specifying a domain Contents Subsections

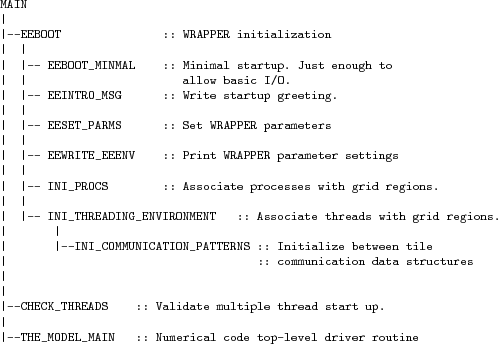

When code is started under the WRAPPER, execution begins in a main routine eesupp/src/main.F that is owned by the WRAPPER. Control is transferred to the application through a routine called THE_MODEL_MAIN() once the WRAPPER has initialized correctly and has created the necessary variables to support subsequent calls to communication routines by the application code. The startup calling sequence followed by the WRAPPER is shown in figure 4.11.


4.3.2.1 Multi-threaded execution

Prior to transferring control to the procedure THE_MODEL_MAIN(), the WRAPPER may cause several coarse-grain threads to be initialized. The routine THE_MODEL_MAIN() is called once for each thread and is passed a single stack argument, the thread number, stored in the variable myThid. In addition to specifying a decomposition with multiple tiles per process (see section 4.3.1), configuring and starting a code to run using multiple threads requires the following steps.
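The once-per-thread calling pattern can be illustrated with a minimal Python sketch. It is not WRAPPER code: the function the_model_main is only a stand-in for THE_MODEL_MAIN(), the helper start_threads and the results dictionary are hypothetical, and numbering threads from 1 is an assumption.

```python
import threading


def the_model_main(my_thid, results):
    """Stand-in for THE_MODEL_MAIN(): its only model input is the thread number."""
    # Each thread records the thread number it was handed.
    results[my_thid] = f"thread {my_thid} running"


def start_threads(n_threads):
    """Call the_model_main once per thread (hypothetical helper).

    Threads are numbered from 1 here; that numbering is an assumption.
    """
    results = {}
    threads = [
        threading.Thread(target=the_model_main, args=(my_thid, results))
        for my_thid in range(1, n_threads + 1)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every thread has returned
    return results


if __name__ == "__main__":
    print(start_threads(2))
```

Each thread works only from the thread number it receives, which is the role myThid plays in the text above.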

4.3.2.1.1 Compilation

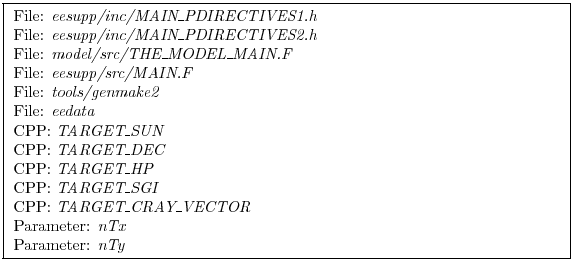

First, the code must be compiled with appropriate multi-threading directives active in the file main.F and with appropriate compiler flags to request multi-threading support. The header files MAIN_PDIRECTIVES1.h and MAIN_PDIRECTIVES2.h contain directives compatible with compilers for Sun, Compaq, SGI and Hewlett-Packard SMP systems and for CRAY PVP systems. These directives can be activated by using the compile-time flags -DTARGET_SUN, -DTARGET_DEC, -DTARGET_SGI, -DTARGET_HP or -DTARGET_CRAY_VECTOR respectively. Compiler options for invoking multi-threaded compilation vary from system to system and from compiler to compiler, and are described in the individual compiler documentation. For the Fortran compiler from Sun, the following options are needed to correctly compile multi-threaded code:

-stackvar -explicitpar -vpara -noautopar

These options are specific to the Sun compiler. Other compilers will use different syntax that will be described in their documentation. The effect of these options is as follows:

- -stackvar Causes all local variables to be allocated in stack storage. This is necessary to ensure that local variables are private to their thread. Note that when using this option it may be necessary to override the default limit on stack size that the operating system assigns to a process. This can normally be done by changing the command shell's stack-size limit setting. However, on some systems changing this limit requires privileged administrator access to modify system parameters.

- -explicitpar Requests that multiple threads be spawned in response to explicit directives in the application code. These directives are inserted with syntax appropriate to the particular target platform when, for example, the -DTARGET_SUN flag is selected.

- -vpara Causes the compiler to describe the multi-threaded configuration it is creating. This is not required, but it can be useful when troubleshooting.

- -noautopar Inhibits any automatic multi-threaded parallelization the compiler may otherwise generate.

An example of valid settings for the eedata file for a domain with two subdomains in y and running with two threads is shown below:

nTx=1,nTy=2

This set of values will cause computations to stay within a single thread when moving across the nSx sub-domains. In the y-direction, however, sub-domains will be split equally between two threads.
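To make the effect of nTx and nTy concrete, the following Python sketch computes which block of sub-domains each thread would handle. It is an illustration under stated assumptions, not the WRAPPER's implementation: the function name thread_tile_ranges is hypothetical, and the contiguous-block layout and the thread numbering (x varying fastest, starting at 1) are assumed. The divisibility checks mirror the requirement, stated with the runtime input parameters in this section, that nTx and nTy subdivide nSx and nSy exactly.

```python
def thread_tile_ranges(nSx, nSy, nTx, nTy):
    """Map each thread number to the sub-domain index ranges it covers.

    Returns {thread: ((bx_lo, bx_hi), (by_lo, by_hi))} with 1-based,
    inclusive ranges. Layout and numbering are assumptions of this sketch.
    """
    if nSx % nTx != 0:
        raise ValueError("nTx must subdivide nSx exactly")
    if nSy % nTy != 0:
        raise ValueError("nTy must subdivide nSy exactly")

    bx_per_thread = nSx // nTx  # sub-domains per thread in x
    by_per_thread = nSy // nTy  # sub-domains per thread in y

    ranges = {}
    for j in range(nTy):
        for i in range(nTx):
            thid = j * nTx + i + 1  # assumed numbering: x varies fastest
            bx = (i * bx_per_thread + 1, (i + 1) * bx_per_thread)
            by = (j * by_per_thread + 1, (j + 1) * by_per_thread)
            ranges[thid] = (bx, by)
    return ranges


if __name__ == "__main__":
    # One sub-domain in x, two in y, with nTx=1 and nTy=2.
    print(thread_tile_ranges(nSx=1, nSy=2, nTx=1, nTy=2))
```

With nSx=1, nSy=2, nTx=1 and nTy=2, both threads share the single x range while each takes one of the two sub-domains in y.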

4.3.2.1.2 Multi-threading files and parameters

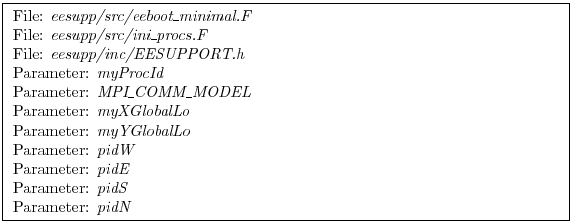

The following files and variables are used in setting up multi-threaded execution.

4.3.2.2 Multi-process execution

Despite its appealing programming model, multi-threaded execution remains less common than multi-process execution. One major reason for this is that many system libraries are still not "thread-safe". This means that, for example, on some systems it is not safe to call system routines to perform I/O when running in multi-threaded mode (except, perhaps, in a limited set of circumstances). Another reason is that support for multi-threaded programming models varies between systems.

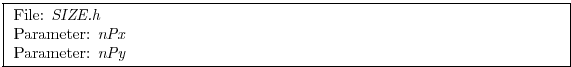

Multi-process execution is more widely supported. To run code in a multi-process configuration, a decomposition specification (see section 4.3.1) is given in which at least one of the parameters nPx or nPy is greater than one. Then, as for multi-threaded operation, appropriate compile-time and run-time steps must be taken.

4.3.2.2.1 Compilation

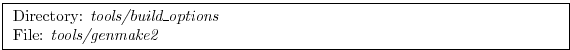

Multi-process execution under the WRAPPER assumes that the portable MPI libraries are available for controlling the start-up of multiple processes. The MPI libraries are not required for performance-critical communication, although they are usually used for it. However, MPI is used to simplify the task of controlling and coordinating the start-up of a large number (hundreds and possibly even thousands) of copies of the same program. The calls to the MPI multi-process startup routines must be activated at compile time. Currently, MPI libraries are invoked by specifying the appropriate options file with the -of flag when running the genmake2 script, which generates the Makefile for compiling and linking MITgcm. (Previously this was done by setting the ALLOW_USE_MPI and ALWAYS_USE_MPI flags in the CPP_EEOPTIONS.h file.) More detailed information about the use of genmake2 for specifying local compiler flags is located in section 3.4.2.

4.3.2.2.2 Execution

The mechanics of starting a program in multi-process mode under MPI are not standardized. Documentation associated with the distribution of MPI installed on a system will describe how to start a program using that distribution. For the open-source MPICH system, the MITgcm program can be started using a command such as

mpirun -np 64 -machinefile mf ./mitgcmuv

In this example the text -np 64 specifies the number of processes that will be created. The numeric value 64 must be equal to the product of the processor grid settings of nPx and nPy in the file SIZE.h. The parameter mf specifies that a text file called "mf" will be read to get a list of processor names on which the sixty-four processes will execute. The syntax of this file is specified by the MPI distribution.
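The relation between the -np value and the processor grid can be checked before launching. The following Python sketch assembles the MPICH-style command quoted above from nPx and nPy; the function name mpirun_command and its defaults are hypothetical, and other MPI distributions may need a different launcher or different flags.

```python
def mpirun_command(nPx, nPy, machinefile="mf", executable="./mitgcmuv"):
    """Build the launch command as an argument list (hypothetical helper).

    The process count passed to -np is the product nPx * nPy, matching the
    processor grid settings in SIZE.h.
    """
    if nPx < 1 or nPy < 1:
        raise ValueError("nPx and nPy must both be at least 1")
    n_procs = nPx * nPy
    return ["mpirun", "-np", str(n_procs), "-machinefile", machinefile, executable]


if __name__ == "__main__":
    # An 8 x 8 processor grid gives the 64 processes used in the text.
    print(" ".join(mpirun_command(8, 8)))
```

Deriving the count from nPx and nPy, rather than typing it by hand, avoids a mismatch between the launch command and SIZE.h.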

4.3.2.2.3 Environment variables

On most systems multi-threaded execution also requires the setting of a special environment variable. On many machines this variable is called PARALLEL and its value should be set to the number of parallel threads required. Generally the help or manual pages associated with the multi-threaded compiler on a machine will explain how to set the required environment variables.

4.3.2.2.4 Runtime input parameters

Finally, the file eedata needs to be configured to indicate the number of threads to be used in the x and y directions. The variables nTx and nTy in this file are used to specify the information required. The product of nTx and nTy must be equal to the number of threads spawned, i.e. the setting of the environment variable PARALLEL. The value of nTx must subdivide the number of sub-domains in x (nSx) exactly. The value of nTy must subdivide the number of sub-domains in y (nSy) exactly.

The multi-process startup of the MITgcm executable mitgcmuv is controlled by the routines EEBOOT_MINIMAL() and INI_PROCS(). The first routine performs basic steps required to make sure each process is started and has a textual output stream associated with it. By default two output files are opened for each process with names STDOUT.NNNN and STDERR.NNNN. The NNNN part of the name is filled in with the process number so that process number 0 will create output files STDOUT.0000 and STDERR.0000, process number 1 will create output files STDOUT.0001 and STDERR.0001, etc. These files are used for reporting status and configuration information and for reporting error conditions on a process-by-process basis. The EEBOOT_MINIMAL() procedure also sets the variables myProcId and MPI_COMM_MODEL. These variables are related to processor identification and are used later in the routine INI_PROCS() to allocate tiles to processes.
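The per-process file naming can be reproduced with a short Python sketch, which is handy when scripting the collection of output from many processes. The function name output_file_names is hypothetical; the four-digit zero padding follows the STDOUT.0000 and STDOUT.0001 examples in the text.

```python
def output_file_names(my_proc_id):
    """Return the (STDOUT, STDERR) file names for a given process number."""
    if my_proc_id < 0:
        raise ValueError("process number must be non-negative")
    suffix = f"{my_proc_id:04d}"  # zero-padded to four digits, as in the text
    return f"STDOUT.{suffix}", f"STDERR.{suffix}"


if __name__ == "__main__":
    for pid in range(2):
        print(output_file_names(pid))
```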

Allocation of tiles to processes is controlled by the routine INI_PROCS(). For each process this routine sets the variables myXGlobalLo and myYGlobalLo. These variables specify, in index space, the coordinates of the southwestern corner of the southwesternmost tile owned by this process. The variables pidW, pidE, pidS and pidN are also set in this routine. These are used to identify processes holding tiles to the west, east, south and north of a given process. These values are stored in global storage in the header file EESUPPORT.h for use by communication routines.

The above does not hold when the exch2 package is used. The exch2 package sets its own parameters to specify the global indices of tiles and their relationships to each other. See the documentation on the exch2 package (section 6.2.4) for details.
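As a rough illustration of the quantities INI_PROCS() sets when exch2 is not in use, the following Python sketch derives them for one process on an nPx by nPy processor grid. It is a sketch under several assumptions, not the routine itself: the function name process_layout is hypothetical, process numbers are assumed to be laid out with x varying fastest, neighbours are assumed to wrap periodically in both directions, and sNx, sNy (tile sizes) together with nSx, nSy (tiles per process) are taken to be the SIZE.h parameters described in section 4.3.1.

```python
def process_layout(rank, nPx, nPy, sNx, sNy, nSx, nSy):
    """Compute global-index origin and neighbour process numbers (sketch).

    Assumptions: 0-based process numbers, x varies fastest in the process
    grid, and periodic wrap-around in both x and y.
    """
    if not 0 <= rank < nPx * nPy:
        raise ValueError("rank outside the processor grid")

    px = rank % nPx   # position of this process in x
    py = rank // nPx  # position of this process in y

    def rank_of(ix, iy):
        # Wrap indices periodically, then convert back to a process number.
        return (iy % nPy) * nPx + (ix % nPx)

    return {
        # 1-based global index of the south-west corner owned by this process
        "myXGlobalLo": 1 + px * sNx * nSx,
        "myYGlobalLo": 1 + py * sNy * nSy,
        "pidW": rank_of(px - 1, py),
        "pidE": rank_of(px + 1, py),
        "pidS": rank_of(px, py - 1),
        "pidN": rank_of(px, py + 1),
    }


if __name__ == "__main__":
    print(process_layout(rank=3, nPx=2, nPy=2, sNx=10, sNy=10, nSx=1, nSy=2))
```

On a 2 by 2 grid the eastern and western neighbours of a process coincide under the periodic assumption, which is why pidW and pidE are equal in that case.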
